Environment: CentOS 7.5, CDH 6.3.1

I. Installing Kerberos

What is Kerberos

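I had not used Kerberos before. A recent project needed multi-tenant management on CDH, so I took a quick look at it. Kerberos verifies that whoever requests access really is who they claim to be.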

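The Kerberos protocol:

Kerberos is mainly used for authentication in computer networks. A user enters credentials only once and can then use the resulting ticket (the ticket-granting ticket) to access multiple services, i.e. SSO (Single Sign On). Because a shared key is established between each Client and Service, the protocol is quite secure.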

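In one sentence, what Kerberos is for:

"Kerberos is mainly used for authentication when communicating over a network."

A simplified diagram of how Kerberos works follows; the real implementation is far more complex.

Reference for how Kerberos works: Jianshu (简书)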

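1. Install the KDC service

The KDC can be installed on a cluster node or on a separate machine. Here it is installed on the CM host (h1).

Install command: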

```
(base) [root@h1 ~]# yum -y install krb5-server krb5-libs krb5-auth-dialog krb5-workstation
```

2. Edit the Kerberos configuration file

```
(base) [root@h1 ~]# vim /etc/krb5.conf
```

Paste in the following content:

```
# Configuration snippets may be placed in this directory as well
includedir /etc/krb5.conf.d/

[logging]
 default = FILE:/var/log/krb5libs.log
 kdc = FILE:/var/log/krb5kdc.log
 admin_server = FILE:/var/log/kadmind.log

[libdefaults]
 dns_lookup_realm = false
 ticket_lifetime = 24h
 renew_lifetime = 7d
 forwardable = true
 rdns = false
 pkinit_anchors = /etc/pki/tls/certs/ca-bundle.crt
 default_realm = CAT.COM
 #default_ccache_name = KEYRING:persistent:%{uid}

[realms]
 CAT.COM = {
  kdc = master
  admin_server = master
 }

[domain_realm]
 .cat.com = CAT.COM
 cat.com = CAT.COM
```

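* `cat.com`/`CAT.COM` are custom names (domain and realm). `kdc` and `admin_server` (here `master`) must be the hostname of the KDC machine, so replace them with your own.

* By default a ticket is valid for 24h and can be renewed for up to 7d. Each renewal must happen while the current ticket is still valid. With a 24-hour ticket you must renew within those 24 hours, or the ticket expires and can no longer be renewed.

How to renew:

Switch (sudo) to the user whose ticket needs renewing and log in with `kinit username`.

Run `kinit -R` to renew, then check the new expiry time with `klist`.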
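The renewal rule above (renew with `kinit -R` only while the current ticket is still valid) can be wrapped in a small helper. This is a minimal sketch with three assumptions: the MIT client tools from krb5-workstation are installed, the user has already run `kinit`, and the helper name `renew_tgt` is hypothetical.

```shell
# Hypothetical helper: renew the current TGT only while it is still valid.
# Assumes MIT krb5 client tools and a prior "kinit <principal>".
renew_tgt() {
    # "klist -s" prints nothing and exits non-zero if the credentials
    # cache cannot be read or its tickets have expired.
    if klist -s; then
        # Renewal also fails once the "renew until" time has passed.
        kinit -R && echo "renewed"
    else
        echo "no valid ticket: run kinit <principal> again" >&2
        return 1
    fi
}

# Example: renew, then show the new expiry time.
#   renew_tgt && klist
```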

3. Edit /var/kerberos/krb5kdc/kadm5.acl

```
(base) [root@h1 ~]# vim /var/kerberos/krb5kdc/kadm5.acl
*/admin@CAT.COM *
*/master@CAT.COM *
```

4. Edit /var/kerberos/krb5kdc/kdc.conf

```
(base) [root@h1 ~]# vim /var/kerberos/krb5kdc/kdc.conf
```

Copy in the following content:

```
[kdcdefaults]
 kdc_ports = 88
 kdc_tcp_ports = 88

[realms]
 CAT.COM = {
  #master_key_type = aes256-cts
  #ticket_lifetime = 10m
  max_renewable_life = 7d 0h 0m 0s
  acl_file = /var/kerberos/krb5kdc/kadm5.acl
  dict_file = /usr/share/dict/words
  admin_keytab = /var/kerberos/krb5kdc/kadm5.keytab
  supported_enctypes = aes256-cts:normal aes128-cts:normal des3-hmac-sha1:normal arcfour-hmac:normal camellia256-cts:normal camellia128-cts:normal des-hmac-sha1:normal des-cbc-md5:normal des-cbc-crc:normal
 }
```

5. Create the Kerberos database

Command:

```
(base) [root@h1 ~]# kdb5_util create -r CAT.COM -s
Loading random data
Initializing database '/var/kerberos/krb5kdc/principal' for realm 'CAT.COM',
master key name 'K/M@CAT.COM'
You will be prompted for the database Master Password.
It is important that you NOT FORGET this password.
Enter KDC database master key:
Re-enter KDC database master key to verify:
# Enter the Kerberos database master password at the two prompts above.
```

6. Create the Kerberos admin account

```
(base) [root@h1 ~]# kadmin.local
Authenticating as principal root/admin@CAT.COM with password.
kadmin.local: addprinc admin/admin@CAT.COM
WARNING: no policy specified for admin/admin@CAT.COM; defaulting to no policy
Enter password for principal "admin/admin@CAT.COM":
Re-enter password for principal "admin/admin@CAT.COM":
Principal "admin/admin@CAT.COM" created.
kadmin.local: exit
```

7. Start krb5kdc and kadmin, and enable Kerberos at boot

Start krb5kdc and kadmin:

```
[root@h1 ~]# systemctl enable kadmin
Created symlink from /etc/systemd/system/multi-user.target.wants/kadmin.service to /usr/lib/systemd/system/kadmin.service.
[root@h1 ~]# systemctl start krb5kdc
[root@h1 ~]# systemctl start kadmin
```

Enable at boot:

```
[root@h1 ~]# systemctl enable krb5kdc
```
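To confirm that both daemons are running and will come back after a reboot, a quick check helps. This is a minimal sketch, assuming systemd (as on CentOS 7) and the unit names `krb5kdc` and `kadmin` used above. The function name `check_kdc_services` is hypothetical.

```shell
# Hypothetical check: are the KDC daemons running and enabled at boot?
# Assumes systemd and the unit names used in step 7.
check_kdc_services() {
    rc=0
    for svc in krb5kdc kadmin; do
        # --quiet suppresses output; only the exit status is used.
        if ! systemctl is-active --quiet "$svc"; then
            echo "$svc is not running" >&2
            rc=1
        fi
        if ! systemctl is-enabled --quiet "$svc"; then
            echo "$svc is not enabled at boot" >&2
            rc=1
        fi
    done
    return $rc
}

# Usage: check_kdc_services && echo "KDC services OK"
```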

8. Install the Kerberos client on all machines in the cluster, including the KDC machine

```
[root@h2 ~]# yum -y install krb5-libs krb5-workstation
[root@h3 ~]# yum -y install krb5-libs krb5-workstation
[root@h4 ~]# yum -y install krb5-libs krb5-workstation
```

9. Install an extra package on the CM server

```
[root@h1 ~]# yum -y install openldap-clients
```

10. Copy krb5.conf from the KDC server to /etc/ on every machine in the cluster

```
scp /etc/krb5.conf root@h2:/etc/
```
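The `scp` has to be repeated for every node. The loop below is a minimal sketch that does this, assuming root SSH access from the KDC host. The host names h2 to h4 follow step 8, and the helper `push_krb5_conf` and the `DRY_RUN` switch are hypothetical.

```shell
# Hypothetical helper: push the KDC's /etc/krb5.conf to the given hosts.
# With DRY_RUN=1 the scp commands are printed instead of executed.
push_krb5_conf() {
    for host in "$@"; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "scp /etc/krb5.conf root@$host:/etc/"
        else
            scp /etc/krb5.conf "root@$host:/etc/" || return 1
        fi
    done
}

# Usage: push_krb5_conf h2 h3 h4
```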

11. Add an admin account for Cloudera Manager in the KDC

```
(base) [root@h1 ~]# kadmin.local
Authenticating as principal admin/admin@CAT.COM with password.
kadmin.local: addprinc cloudera-scm/admin@CAT.COM
WARNING: no policy specified for cloudera-scm/admin@CAT.COM; defaulting to no policy
Enter password for principal "cloudera-scm/admin@CAT.COM":
Re-enter password for principal "cloudera-scm/admin@CAT.COM":
Principal "cloudera-scm/admin@CAT.COM" created.
kadmin.local: exit
```

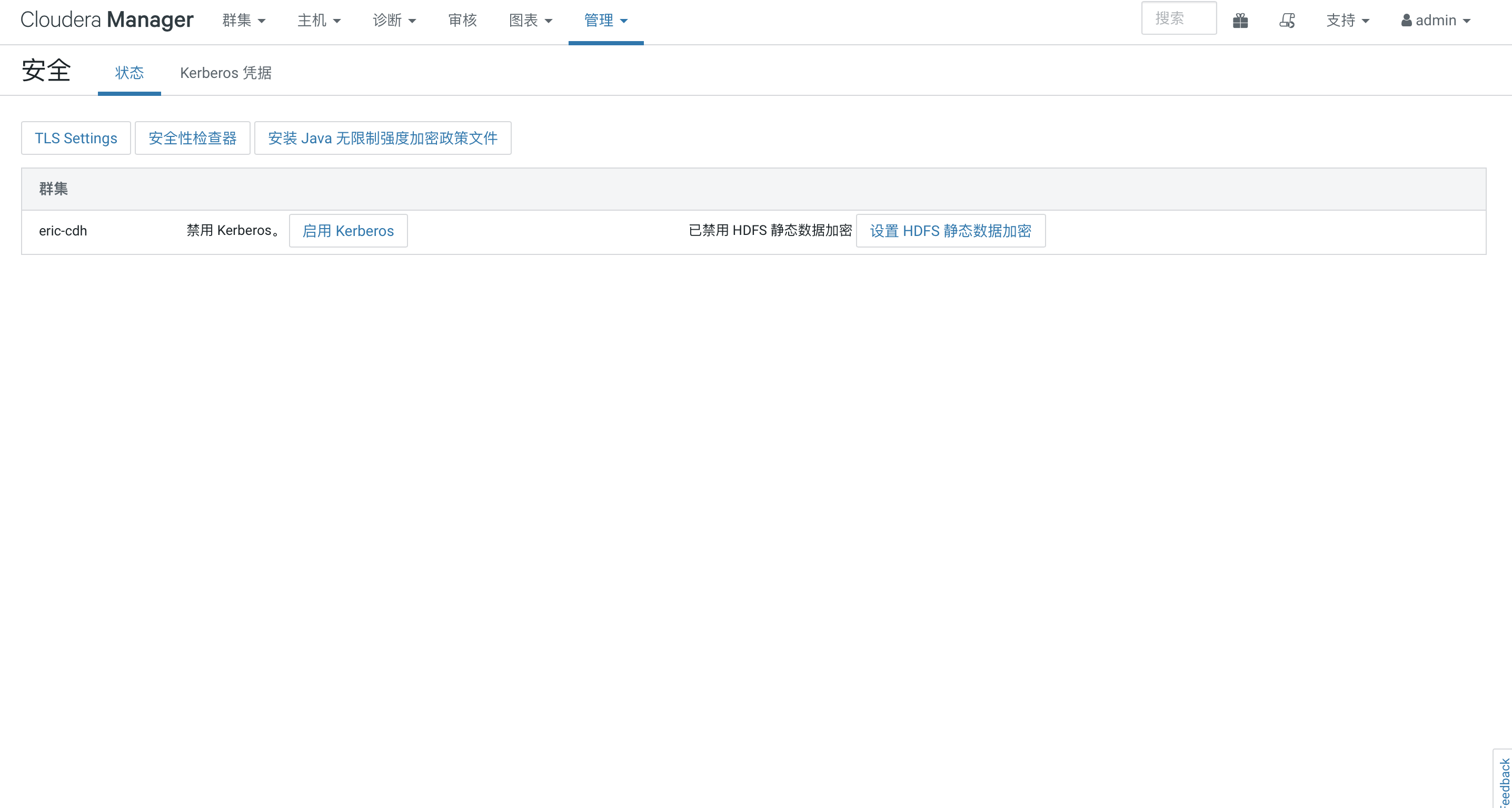

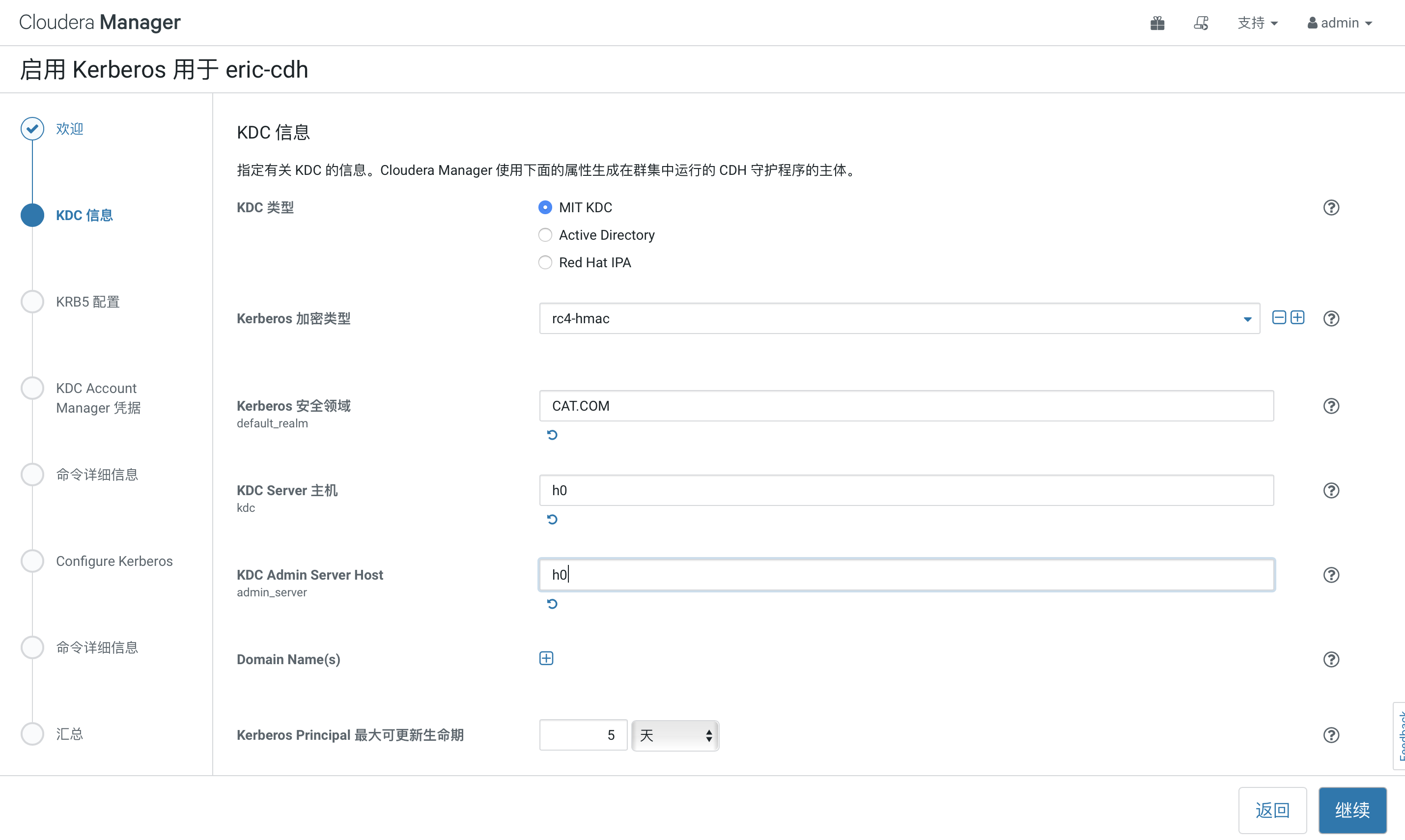

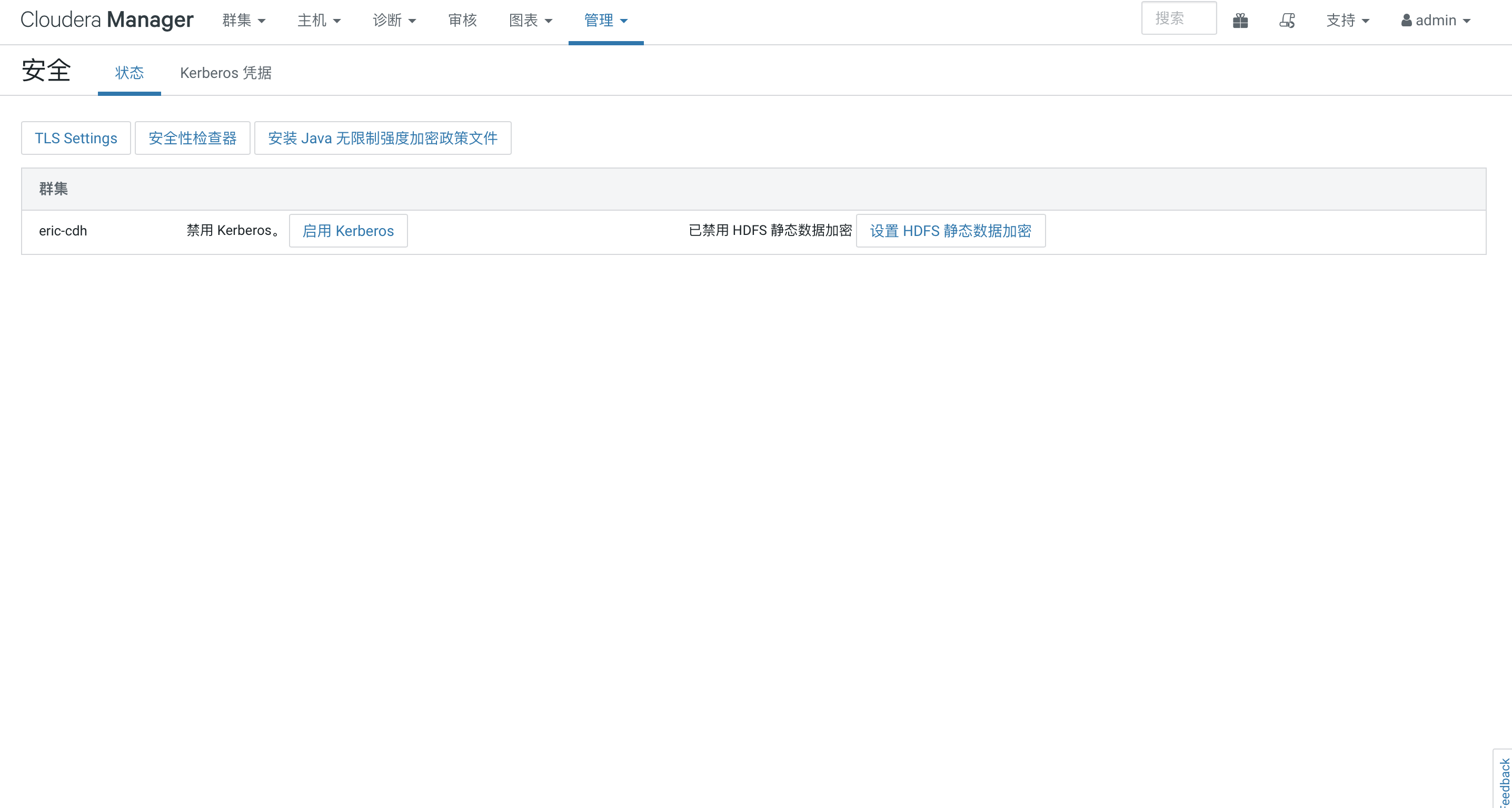

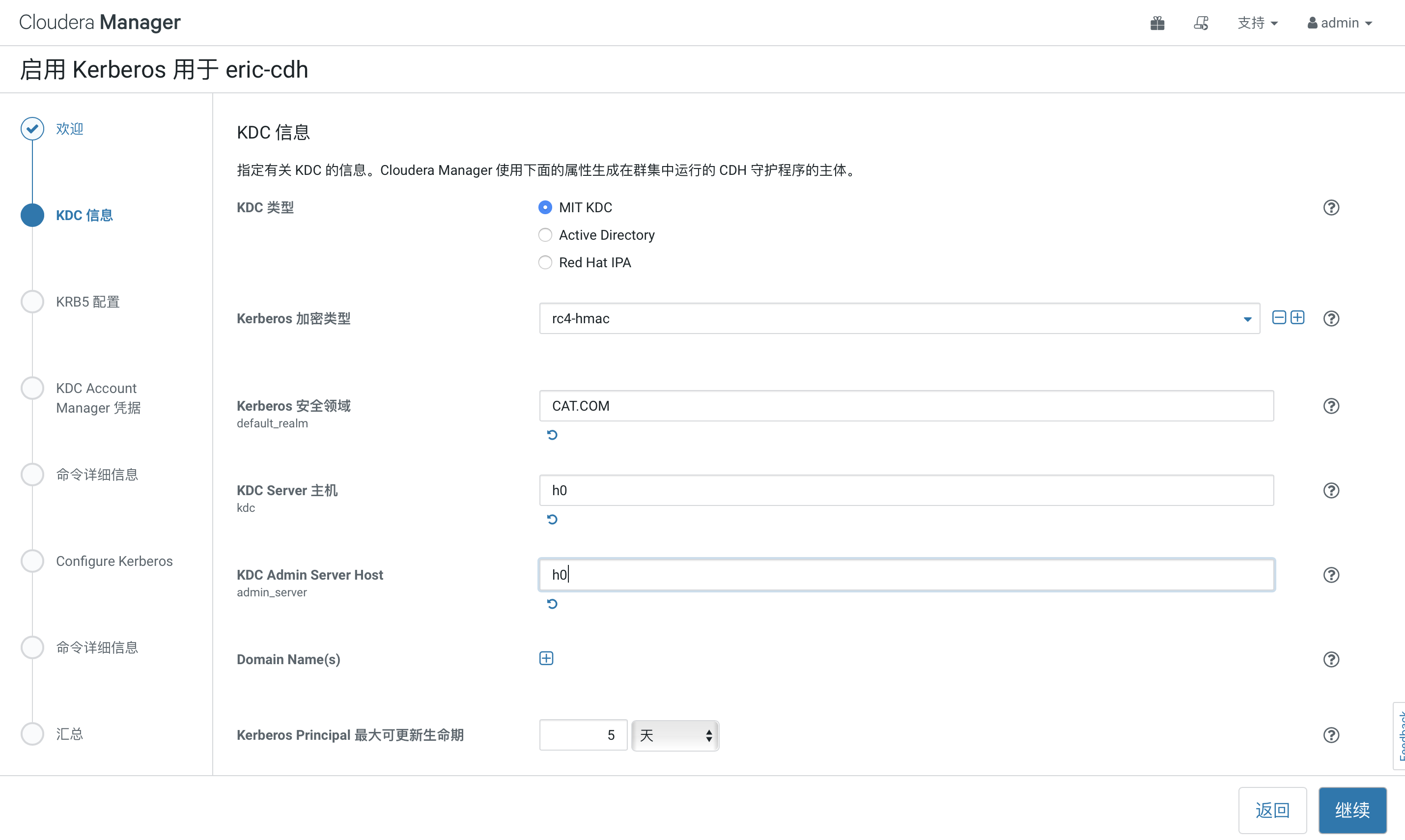

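II. Configure Kerberos in the CM admin console

Choose Administration > Security > Enable Kerberos.

Check all the boxes.

For KDC Server and KDC Admin Server, enter the hostname of the machine where your KDC is installed.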


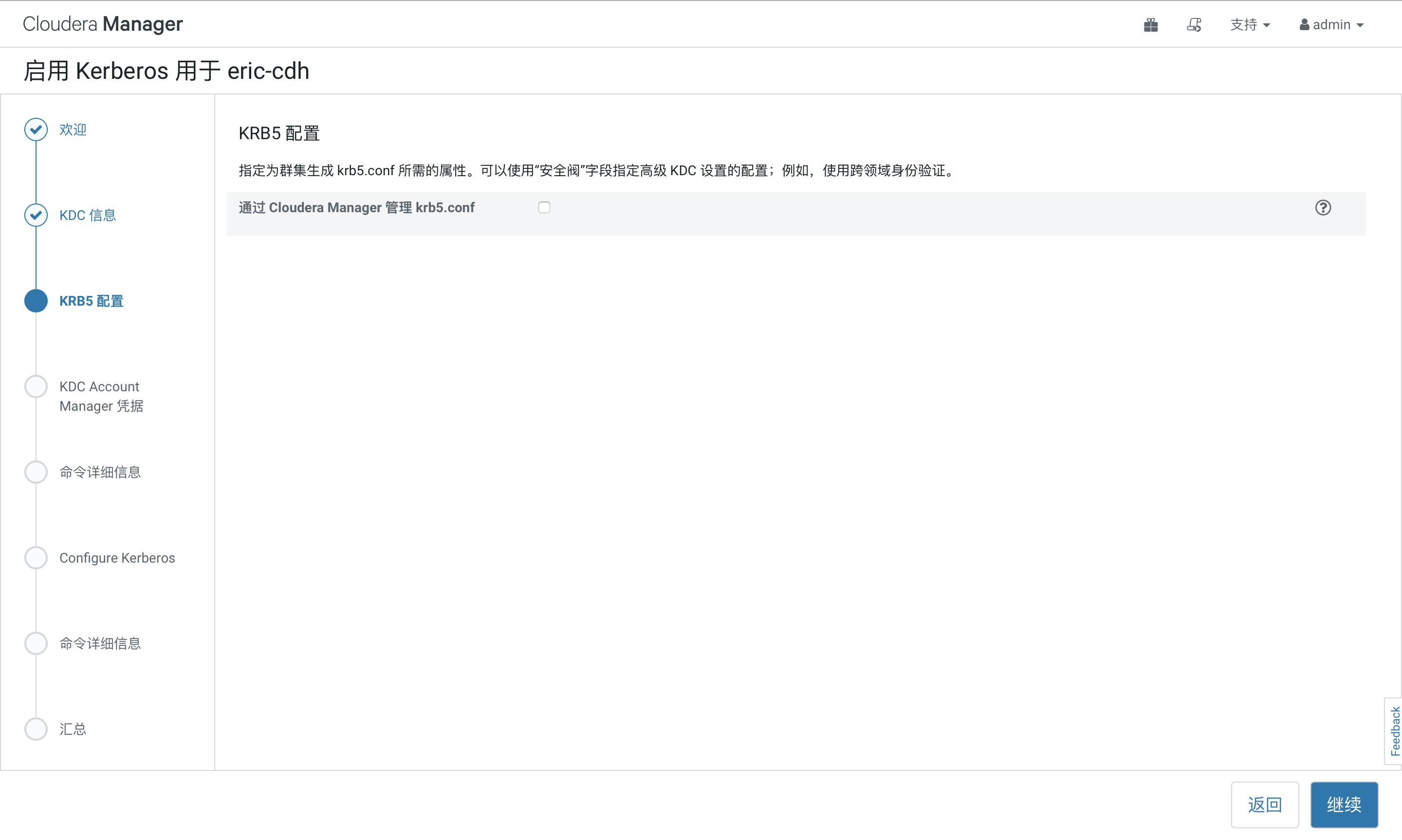

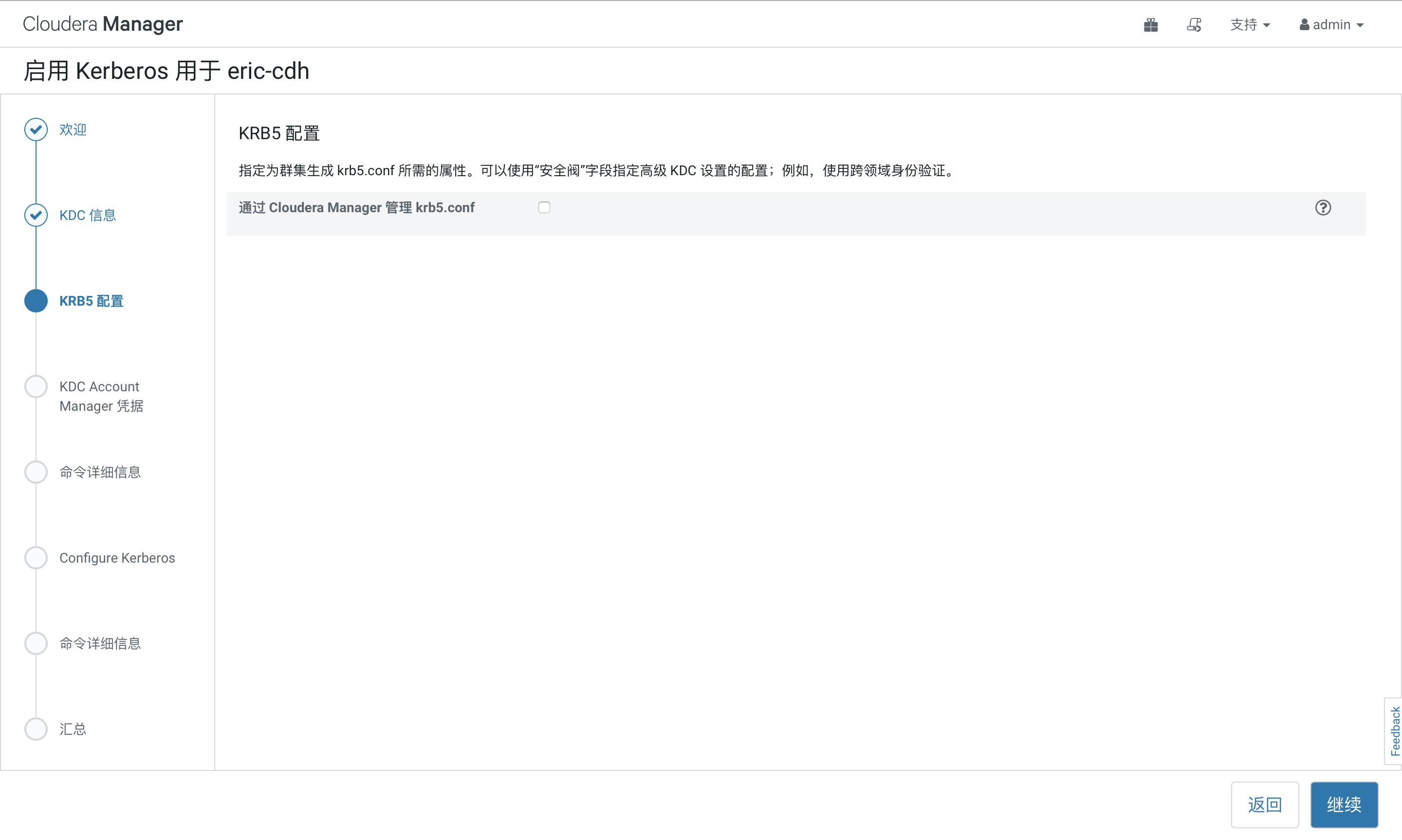

Leave these unchecked.


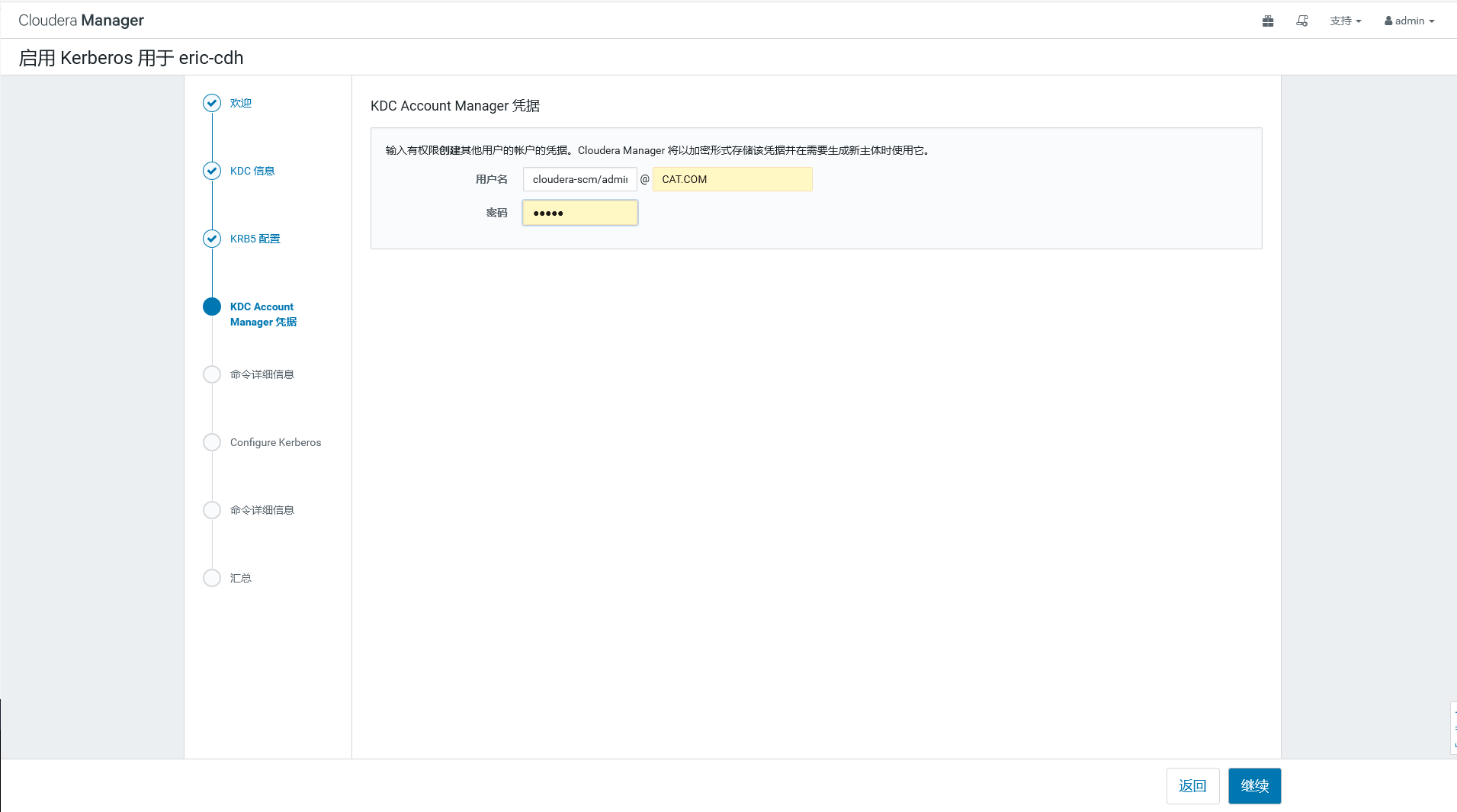

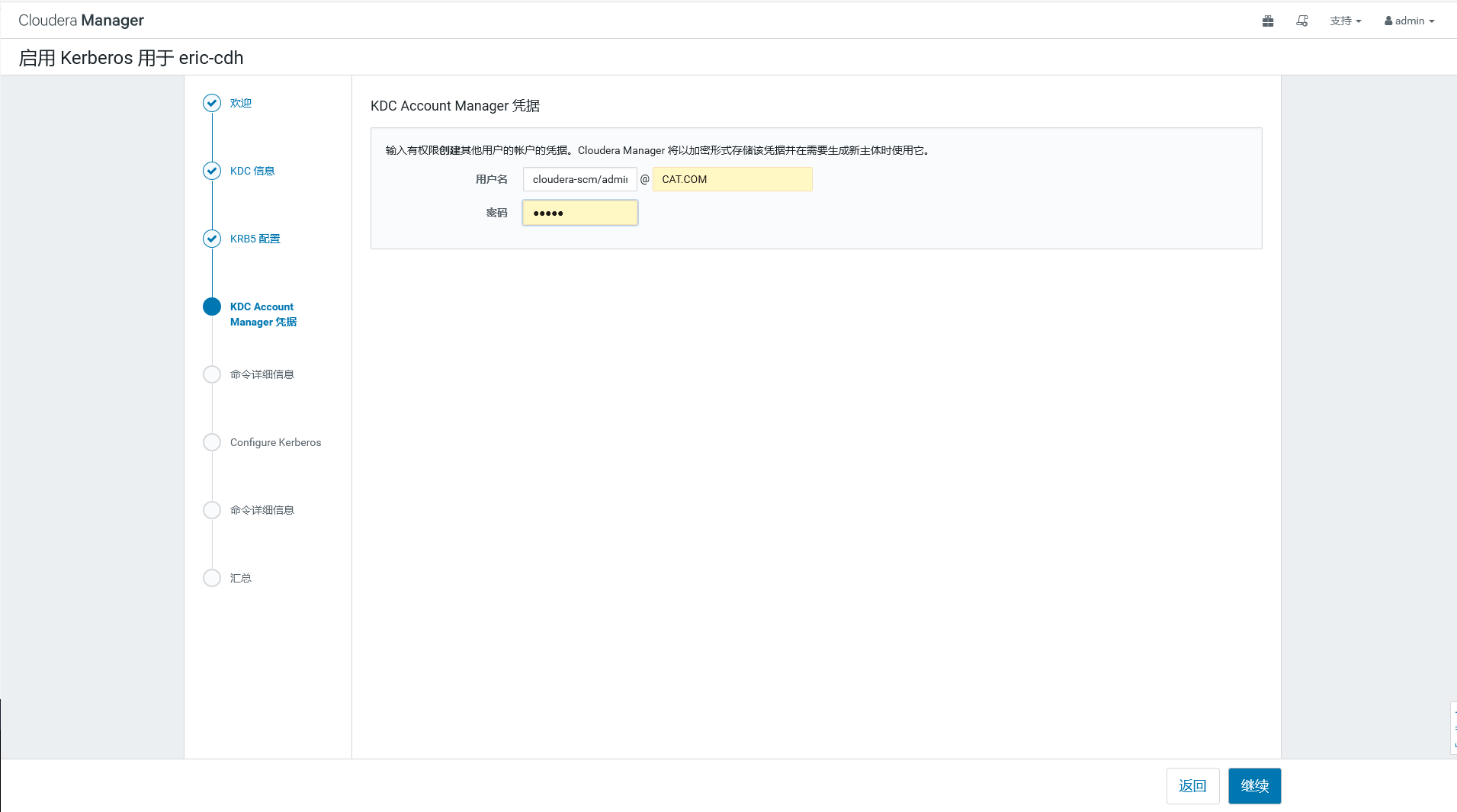

Enter the user and password created in step 11 above, then confirm through the remaining pages.


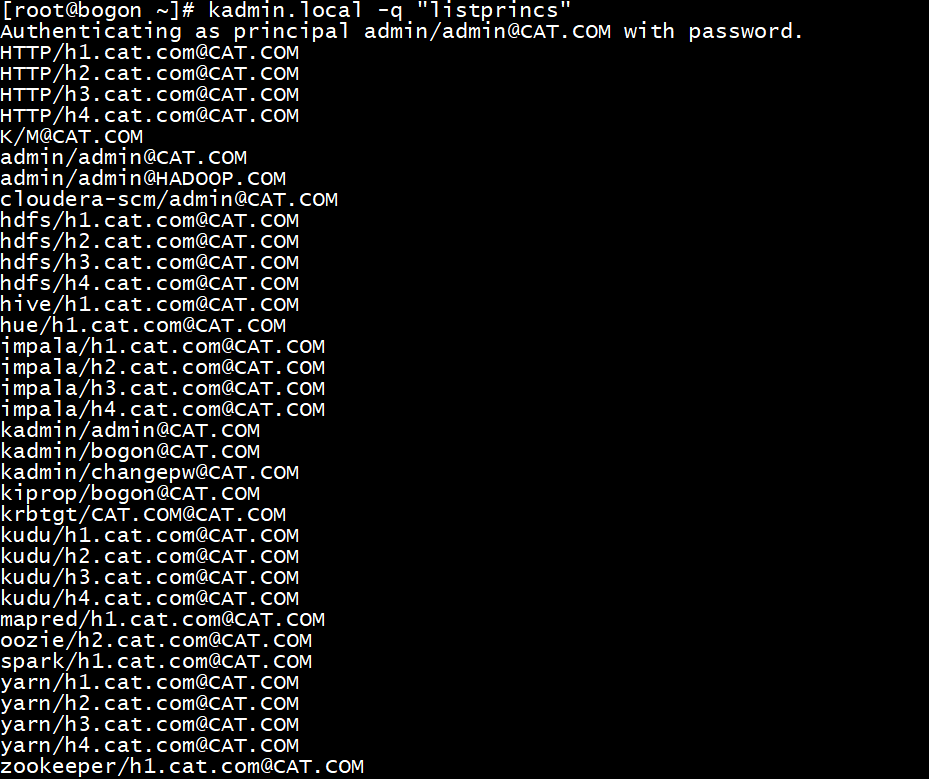

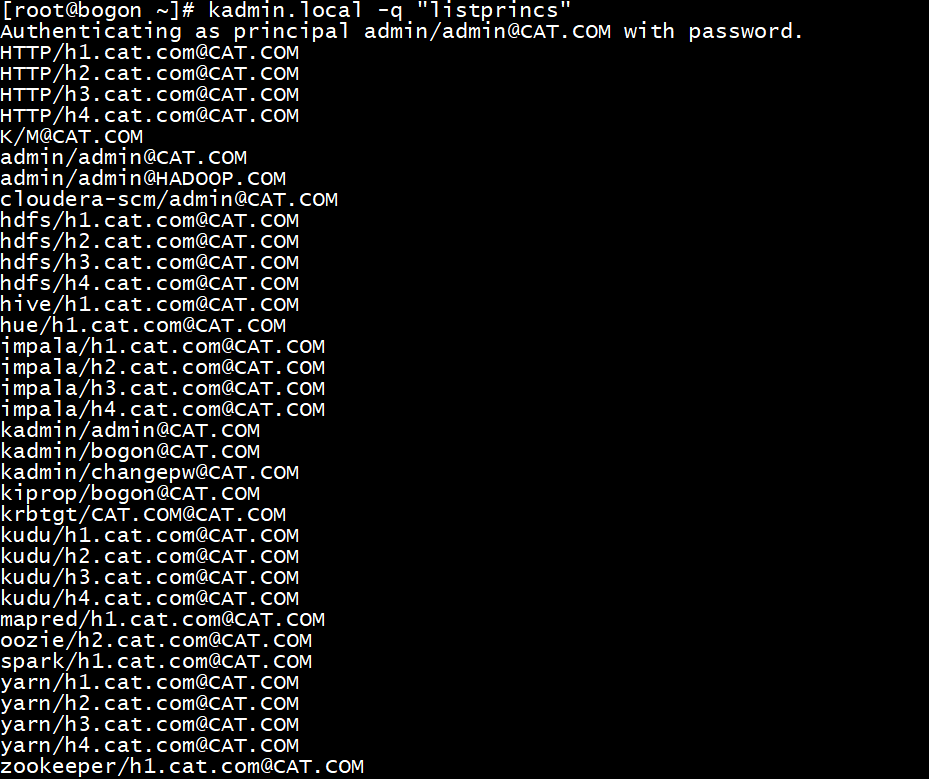

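When the wizard finishes, Kerberos has created the CDH-related principals. You can list all current Kerberos principals with `kadmin.local -q "listprincs"`. At first I did not know how to log in as these auto-created principals, because they have no password. I later found that each role logs in with its own keytab.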

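The `listprincs` output gets long once CM has created principals for every role and host. Grouping it by service name shows at a glance what the wizard created. This is a minimal sketch, and `count_by_service` is a hypothetical helper that reads the principal list on stdin. Principals such as `krbtgt/CAT.COM@CAT.COM` and `K/M@CAT.COM` also contain a `/`, so they appear as their own groups.

```shell
# Hypothetical helper: count principals of the form service/instance@REALM
# per service name. Reads "kadmin.local -q listprincs" output on stdin.
count_by_service() {
    # Skip kadmin's "Authenticating as principal ..." banner (it contains
    # spaces) and plain user principals without a "/".
    awk -F'@' '/\// && !/ / { split($1, part, "/"); n[part[1]]++ }
               END { for (s in n) print s, n[s] }' | sort
}

# Usage: kadmin.local -q "listprincs" | count_by_service
```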

III. Using Kerberos

Create a test user, user1, to run Hive and MapReduce jobs. The user1 OS account must exist on every node in the cluster.

1. Create a principal for user1 with kadmin

```
(base) [root@h1 ~]# kadmin.local
Authenticating as principal admin/admin@CAT.COM with password.
kadmin.local: addprinc user1@CAT.COM
WARNING: no policy specified for user1@CAT.COM; defaulting to no policy
Enter password for principal "user1@CAT.COM":
Re-enter password for principal "user1@CAT.COM":
Principal "user1@CAT.COM" created.
kadmin.local: exit
```

2. Log in to Kerberos as user1

```
[root@h1 ~]# kdestroy
[root@h1 ~]# kinit user1
# Enter user1's password
Password for user1@CAT.COM:
# Check user1's ticket validity
(base) [root@h1 ~]# klist
Ticket cache: FILE:/tmp/krb5cc_0
Default principal: user1@CAT.COM
Valid starting Expires Service principal
12/26/2019 18:29:17 12/27/2019 18:29:17 krbtgt/CAT.COM@CAT.COM
renew until 01/03/2019 18:29:17
```

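3. Add the user1 account on every node in the cluster

The account must exist on every machine because jobs are distributed. YARN and MapReduce, for example, allocate containers on different machines, and jobs fail if the user is missing on any of them.

```
[root@h1 ~]# useradd user1
[root@h2 ~]# useradd user1
[root@h3 ~]# useradd user1
```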

- Add user1 to the hdfs and hadoop groups

```
# -a appends to the existing supplementary groups; without -a, -G replaces them
[root@h1 ~]# usermod -a -G hdfs,hadoop user1
```
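Creating the account and setting its groups has to happen on every node. The function below is a minimal sketch that does both over SSH, assuming root SSH access and that the `hdfs` and `hadoop` groups already exist on each node. `add_user_everywhere` and `DRY_RUN` are hypothetical names.

```shell
# Hypothetical helper: create an OS account on each host (if missing) and
# add it to the hdfs and hadoop groups. DRY_RUN=1 prints the ssh commands.
add_user_everywhere() {
    user=$1
    shift
    for host in "$@"; do
        # "id" succeeds if the user exists; -a keeps existing groups.
        cmd="id $user >/dev/null 2>&1 || useradd $user; usermod -a -G hdfs,hadoop $user"
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "ssh root@$host '$cmd'"
        else
            ssh "root@$host" "$cmd" || return 1
        fi
    done
}

# Usage: add_user_everywhere user1 h1 h2 h3
```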

4. Run a MapReduce job

```
# First log in to Kerberos as user1
[root@h1 conf]# su - user1
[root@h1 conf]# kinit user1
Password for user1@CAT.COM:
[root@h1 conf]# klist
Ticket cache: FILE:/tmp/krb5cc_0
Default principal: user1@CAT.COM
Valid starting Expires Service principal
12/30/2019 22:27:40 12/31/2019 22:27:40 krbtgt/CAT.COM@CAT.COM
renew until 01/06/2020 22:27:40
# Run the MapReduce example job
[root@h1 conf]# hadoop jar /opt/cloudera/parcels/CDH/lib/hadoop-mapreduce/hadoop-mapreduce-examples.jar pi 10 1
WARNING: Use "yarn jar" to launch YARN applications.
Number of Maps = 10
Samples per Map = 1
Wrote input for Map #0
Wrote input for Map #1
Wrote input for Map #2
Wrote input for Map #3
Wrote input for Map #4
Wrote input for Map #5
Wrote input for Map #6
Wrote input for Map #7
Wrote input for Map #8
Wrote input for Map #9
Starting Job
19/12/30 22:32:49 INFO client.RMProxy: Connecting to ResourceManager at h1.cat.com/192.168.1.171:8032
19/12/30 22:32:50 INFO hdfs.DFSClient: Created token for user2: HDFS_DELEGATION_TOKEN owner=user2@CAT.COM, renewer=yarn, realUser=, issueDate=1577716370019, maxDate=1578321170019, sequenceNumber=4, masterKeyId=10 on 192.168.1.171:8020
19/12/30 22:32:50 INFO security.TokenCache: Got dt for hdfs://h1.cat.com:8020; Kind: HDFS_DELEGATION_TOKEN, Service: 192.168.1.171:8020, Ident: (token for user2: HDFS_DELEGATION_TOKEN owner=user2@CAT.COM, renewer=yarn, realUser=, issueDate=1577716370019, maxDate=1578321170019, sequenceNumber=4, masterKeyId=10)
19/12/30 22:32:50 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /user/user2/.staging/job_1577701614246_0003
19/12/30 22:32:50 INFO input.FileInputFormat: Total input files to process : 10
19/12/30 22:32:50 INFO mapreduce.JobSubmitter: number of splits:10
19/12/30 22:32:50 INFO Configuration.deprecation: yarn.resourcemanager.system-metrics-publisher.enabled is deprecated. Instead, use yarn.system-metrics-publisher.enabled
19/12/30 22:32:50 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1577701614246_0003
19/12/30 22:32:50 INFO mapreduce.JobSubmitter: Executing with tokens: [Kind: HDFS_DELEGATION_TOKEN, Service: 192.168.1.171:8020, Ident: (token for user2: HDFS_DELEGATION_TOKEN owner=user2@CAT.COM, renewer=yarn, realUser=, issueDate=1577716370019, maxDate=1578321170019, sequenceNumber=4, masterKeyId=10)]
19/12/30 22:32:51 INFO conf.Configuration: resource-types.xml not found
19/12/30 22:32:51 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.
19/12/30 22:32:51 INFO impl.YarnClientImpl: Submitted application application_1577701614246_0003
19/12/30 22:32:51 INFO mapreduce.Job: The url to track the job: http://h1.cat.com:8088/proxy/application_1577701614246_0003/
19/12/30 22:32:51 INFO mapreduce.Job: Running job: job_1577701614246_0003
19/12/30 22:33:04 INFO mapreduce.Job: Job job_1577701614246_0003 running in uber mode : false
19/12/30 22:33:04 INFO mapreduce.Job: map 0% reduce 0%
19/12/30 22:33:14 INFO mapreduce.Job: map 20% reduce 0%
19/12/30 22:33:19 INFO mapreduce.Job: map 30% reduce 0%
19/12/30 22:33:20 INFO mapreduce.Job: map 40% reduce 0%
19/12/30 22:33:24 INFO mapreduce.Job: map 50% reduce 0%
19/12/30 22:33:25 INFO mapreduce.Job: map 60% reduce 0%
19/12/30 22:33:30 INFO mapreduce.Job: map 80% reduce 0%
19/12/30 22:33:35 INFO mapreduce.Job: map 100% reduce 0%
19/12/30 22:33:41 INFO mapreduce.Job: map 100% reduce 100%
19/12/30 22:33:42 INFO mapreduce.Job: Job job_1577701614246_0003 completed successfully
19/12/30 22:33:42 INFO mapreduce.Job: Counters: 54
File System Counters
FILE: Number of bytes read=49
FILE: Number of bytes written=2433690
FILE: Number of read operations=0
FILE: Number of large read operations=0
FILE: Number of write operations=0
......................
Job Finished in 53.382 seconds
Estimated value of Pi is 3.60000000000000000000
```

Test a beeline connection

```
beeline> !connect jdbc:hive2://localhost:10000/;principal=hive/h1@CAT.COM
Connecting to jdbc:hive2://localhost:10000/;principal=hive/h1@CAT.COM
Connected to: Apache Hive (version 2.1.1-cdh6.3.1)
Driver: Hive JDBC (version 2.1.1-cdh6.3.1)
Transaction isolation: TRANSACTION_REPEATABLE_READ
0: jdbc:hive2://localhost:10000/>
```
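The same connection can be opened non-interactively with `beeline -u <url>`. This is a minimal sketch of building the Kerberos JDBC URL, assuming HiveServer2 listens on port 10000 and its principal has the form `hive/<host>@<REALM>`. The helper name `hive_jdbc_url` is hypothetical.

```shell
# Hypothetical helper: build a HiveServer2 JDBC URL with a Kerberos principal.
# $1 = HiveServer2 host, $2 = realm, $3 = port (default 10000).
hive_jdbc_url() {
    host=$1
    realm=$2
    port=${3:-10000}
    echo "jdbc:hive2://$host:$port/;principal=hive/$host@$realm"
}

# Usage (requires a valid ticket from kinit first):
#   beeline -u "$(hive_jdbc_url h1.cat.com CAT.COM)"
```

The helper uses the same host in the URL and in the principal. For that reason, pass the name that appears in HiveServer2's principal, such as the FQDN h1.cat.com, rather than `localhost`.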

That completes the Kerberos setup.

IV. Setting up Kerberos for Hive

The following setup is required before Hive can be used.

- Otherwise you may get the error below.

- The error occurs in two situations:

1. the ticket expired without being renewed

2. no keytab was generated

`Failed on local exception: java.io.IOException: org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS]; Host Details : local host is: "h1.cat.com/192.168.1.171"; destination host is: "h1.cat.com":8020;`

```
[root@h1 ~]# hive
WARNING: Use "yarn jar" to launch YARN applications.
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567/jars/log4j-slf4j-impl-2.8.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567/jars/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
Logging initialized using configuration in jar:file:/opt/cloudera/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567/jars/hive-common-2.1.1-cdh6.3.1.jar!/hive-log4j2.properties Async: false
Exception in thread "main" java.lang.RuntimeException: java.io.IOException: Failed on local exception: java.io.IOException: org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS]; Host Details : local host is: "h1.cat.com/192.168.1.171"; destination host is: "h1.cat.com":8020;
at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:604)
at org.apache.hadoop.hive.ql.session.SessionState.beginStart(SessionState.java:545)
at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:763)
at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:699)
at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
at java.lang.reflect.Method.invoke(Method.java:498)
at org.apache.hadoop.util.RunJar.run(RunJar.java:313)
at org.apache.hadoop.util.RunJar.main(RunJar.java:227)
Caused by: java.io.IOException: Failed on local exception: java.io.IOException: org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS]; Host Details : local host is: "h1.cat.com/192.168.1.171"; destination host is: "h1.cat.com":8020;
at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:808)
at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1503)
at org.apache.hadoop.ipc.Client.call(Client.java:1445)
at org.apache.hadoop.ipc.Client.call(Client.java:1355)
at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:228)
at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:116)
at com.sun.proxy.$Proxy28.getFileInfo(Unknown Source)
at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getFileInfo(ClientNamenodeProtocolTranslatorPB.java:875)
at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
at java.lang.reflect.Method.invoke(Method.java:498)
at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:422)
at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:165)
at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:157)
at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:95)
at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:359)
at com.sun.proxy.$Proxy29.getFileInfo(Unknown Source)
at org.apache.hadoop.hdfs.DFSClient.getFileInfo(DFSClient.java:1630)
at org.apache.hadoop.hdfs.DistributedFileSystem$29.doCall(DistributedFileSystem.java:1496)
at org.apache.hadoop.hdfs.DistributedFileSystem$29.doCall(DistributedFileSystem.java:1493)
at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)
at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1508)
at org.apache.hadoop.fs.FileSystem.exists(FileSystem.java:1617)
at org.apache.hadoop.hive.ql.session.SessionState.createRootHDFSDir(SessionState.java:712)
at org.apache.hadoop.hive.ql.session.SessionState.createSessionDirs(SessionState.java:650)
at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:580)
... 9 more
Caused by: java.io.IOException: org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS]
at org.apache.hadoop.ipc.Client$Connection$1.run(Client.java:756)
```

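1. Generate the keytab

Run the following commands on the KDC server node.

They put keys for the Hive principals of all cluster machines into one keytab file, which is then sent to each machine.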

```
cd /var/kerberos/krb5kdc/
kadmin.local -q "addprinc -randkey hive/h1.cat.com@CAT.COM"
kadmin.local -q "addprinc -randkey hive/h2.cat.com@CAT.COM"
kadmin.local -q "addprinc -randkey hive/h3.cat.com@CAT.COM"
kadmin.local -q "xst -k hive.keytab hive/h1.cat.com@CAT.COM"
kadmin.local -q "xst -k hive.keytab hive/h2.cat.com@CAT.COM"
kadmin.local -q "xst -k hive.keytab hive/h3.cat.com@CAT.COM"
```

* h1.cat.com is the hostname, and CAT.COM is the Kerberos realm configured earlier.
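A loop can generate the per-host commands above. This is a minimal sketch, assuming it runs as root on the KDC host. `make_hive_keytab` is a hypothetical helper, and `DRY_RUN=1` prints the `kadmin.local` commands instead of running them.

Be aware that `xst` (an alias of `ktadd`) assigns new random keys to every principal it exports. Any keytab exported earlier for the same principal, for example one generated by Cloudera Manager, therefore stops working. `ktadd -norandkey`, available only in `kadmin.local`, exports the existing keys instead.

```shell
# Hypothetical helper: create hive/<host>@REALM principals and export them
# into one keytab. $1 = realm, $2 = keytab file, remaining args = hosts.
# NOTE: xst re-randomizes each principal's keys, invalidating older keytabs.
make_hive_keytab() {
    realm=$1
    keytab=$2
    shift 2
    for host in "$@"; do
        princ="hive/$host@$realm"
        for q in "addprinc -randkey $princ" "xst -k $keytab $princ"; do
            if [ "${DRY_RUN:-0}" = 1 ]; then
                echo "kadmin.local -q \"$q\""
            else
                kadmin.local -q "$q" || return 1
            fi
        done
    done
}

# Usage: make_hive_keytab CAT.COM hive.keytab h1.cat.com h2.cat.com h3.cat.com
```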

2. Copy hive.keytab to /etc/hive/conf on the other nodes

```
$ scp hive.keytab h1:/etc/hive/conf
$ scp hive.keytab h2:/etc/hive/conf
$ scp hive.keytab h3:/etc/hive/conf
```

Then set its owner and permissions on each of h1, h2 and h3:

```
$ ssh h1 "cd /etc/hive/conf/;chown hive:hadoop hive.keytab ;chmod 400 *.keytab"
$ ssh h2 "cd /etc/hive/conf/;chown hive:hadoop hive.keytab ;chmod 400 *.keytab"
$ ssh h3 "cd /etc/hive/conf/;chown hive:hadoop hive.keytab ;chmod 400 *.keytab"
```

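A keytab works like a permanent credential: no password is needed. If the principal's password is changed in the KDC, the keytab becomes invalid. Anyone else who can read the file can impersonate the principal in the keytab when accessing Hadoop. Make sure the keytab is readable only by its owner (mode 0400).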
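The 0400 rule can be checked mechanically. This is a minimal sketch, assuming GNU coreutils `stat` (as on CentOS 7), where `stat -c %a` prints the octal mode such as `400`. The function name `check_keytab_mode` is hypothetical.

```shell
# Hypothetical check: fail if a keytab's mode is anything other than 0400.
check_keytab_mode() {
    mode=$(stat -c %a "$1") || return 1
    if [ "$mode" != 400 ]; then
        echo "$1 has mode $mode, expected 400" >&2
        return 1
    fi
}

# Usage: check_keytab_mode /etc/hive/conf/hive.keytab
```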

```
[user1@h1 ~]$ kinit -kt hive.keytab hive/h1.cat.com@CAT.COM
[user1@h1 ~]$ hive
```

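V. Problems and summary

1. Errors when connecting with beeline

The problem appeared to be that:

1. the user had not been added to the machines

2. the user had not been added to the hdfs and hadoop groups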

```
beeline> !connect jdbc:hive2://h1:10000/;principal=hive/h1@CAT.COM
Connecting to jdbc:hive2://h1:10000/;principal=hive/h1@CAT.COM
19/12/30 17:36:50 [main]: WARN jdbc.HiveConnection: Failed to connect to h1:10000
Could not open connection to the HS2 server. Please check the server URI and if the URI is correct, then ask the administrator to check the server status.
Error: Could not open client transport with JDBC Uri: jdbc:hive2://h1:10000/;principal=hive/h1@CAT.COM: java.net.ConnectException: Connection refused (Connection refused) (state=08S01,code=0)
beeline> !connect jdbc:hive2://h1:10000/;principal=hive/h1@CAT.COM
Connecting to jdbc:hive2://h1:10000/;principal=hive/h1@CAT.COM
19/12/30 19:10:22 [main]: ERROR transport.TSaslTransport: SASL negotiation failure
javax.security.sasl.SaslException: GSS initiate failed
```

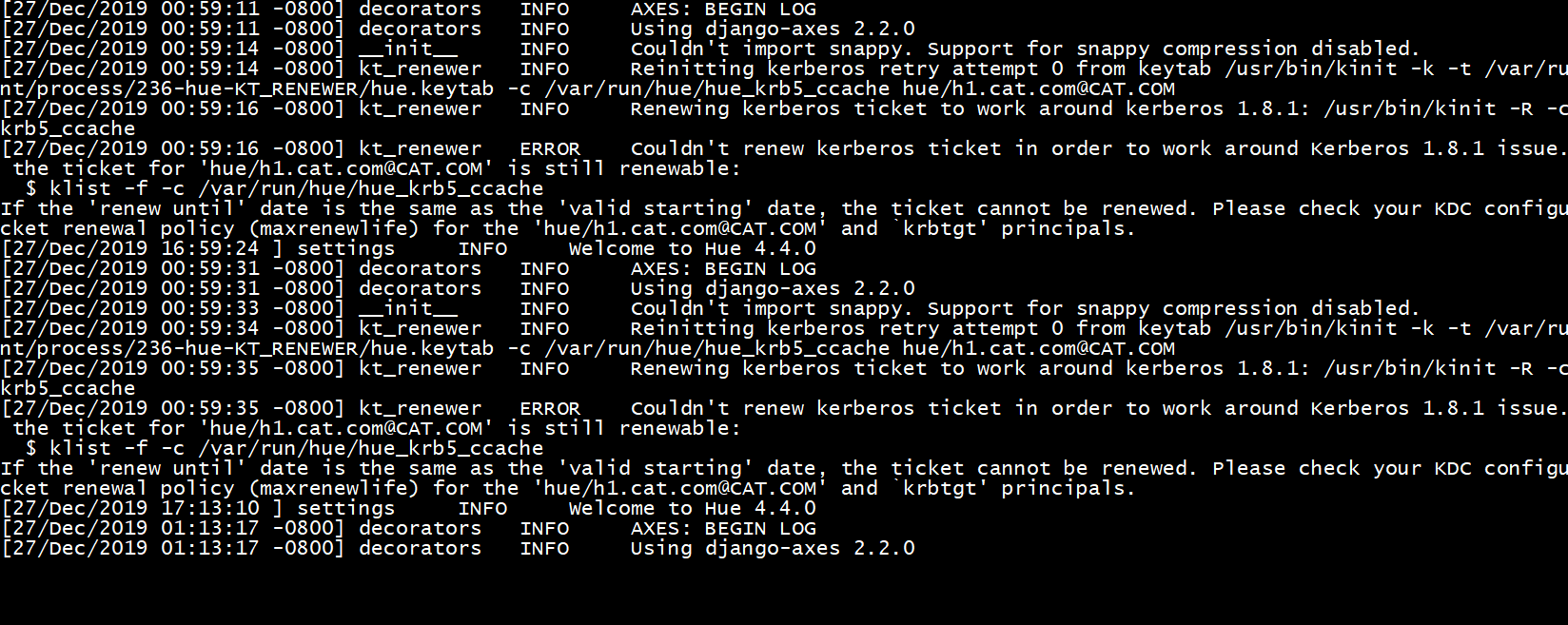

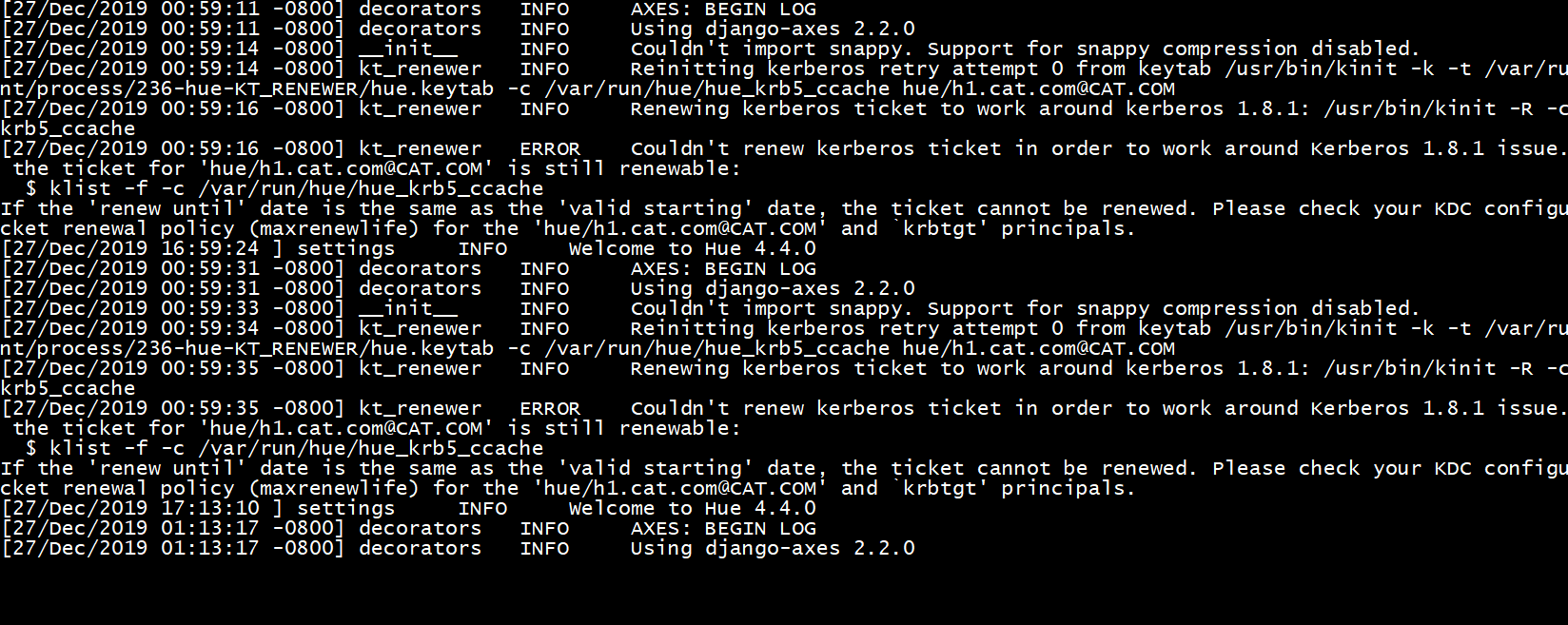

2. Hue error: the Kerberos Ticket Renewer fails to start

Error:

```
INFO kt_renewer
Renewing kerberos ticket to work around kerberos 1.8.1: /bin/kinit -R -c /var/run/hue/hue_krb5_ccache
ERROR kt_renewer Couldn't renew kerberos ticket in order to work around Kerberos 1.8.1 issue. Please check that the ticket for 'hue/$server1@ANYTHING.COM' is still renewable:
$ klist -f -c /var/run/hue/hue_krb5_ccache
If the 'renew until' date is the same as the 'valid starting' date, the ticket cannot be renewed. Please check your KDC configuration, and the ticket renewal policy (maxrenewlife) for the 'hue/$server1@ANYTHING.COM' and `krbtgt' principals.
[19/Feb/2019 07:32:04 ] settings INFO Welcome to Hue 3.9.0
```

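The cause was a bad setting in the KDC configuration file, /var/kerberos/krb5kdc/kdc.conf.

After fixing it and restarting, the error went away. Do not set `ticket_lifetime = 10m` here; keep it commented out as shown below. Ticket-related settings belong in the client configuration (/etc/krb5.conf).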

```
[kdcdefaults]
 kdc_ports = 88
 kdc_tcp_ports = 88

[realms]
 CAT.COM = {
  #master_key_type = aes256-cts
  #ticket_lifetime = 10m
  max_renewable_life = 7d 0h 0m 0s
  acl_file = /var/kerberos/krb5kdc/kadm5.acl
  dict_file = /usr/share/dict/words
  admin_keytab = /var/kerberos/krb5kdc/kadm5.keytab
  supported_enctypes = aes256-cts:normal aes128-cts:normal des3-hmac-sha1:normal arcfour-hmac:normal camellia256-cts:normal camellia128-cts:normal des-hmac-sha1:normal des-cbc-md5:normal des-cbc-crc:normal
 }
```

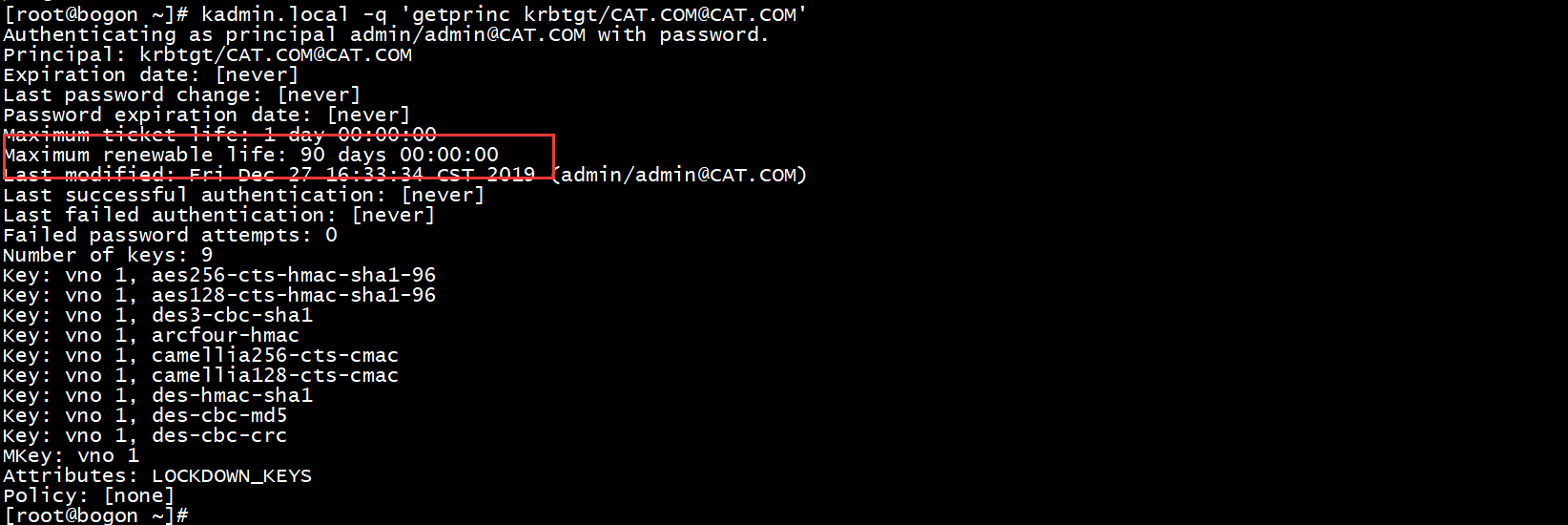

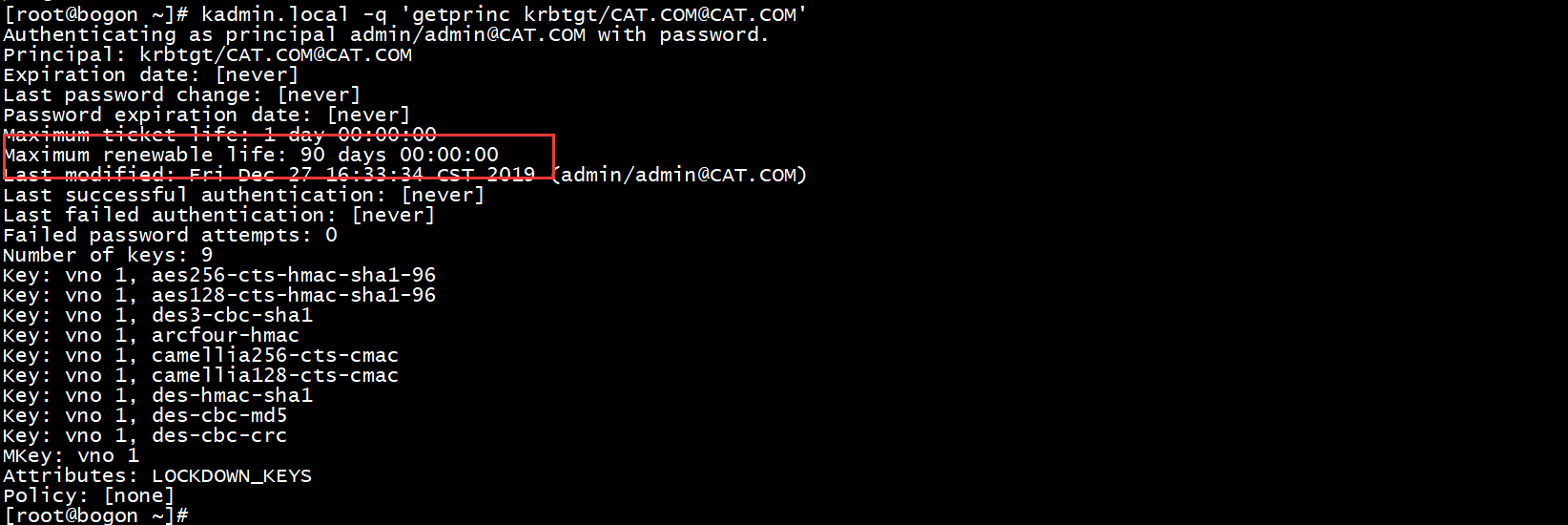

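Another possible cause is a wrong maxrenewlife setting.

In most cases the problem is that maxrenewlife is 0.

Official documentation:

https://docs.cloudera.com/documentation/manager/5-1-x/Configuring-Hadoop-Security-with-Cloudera-Manager/cm5chs_enable_hue_sec_s10.html

Check the maxrenewlife setting with: `kadmin.local -q 'getprinc krbtgt/CAT.COM@CAT.COM'`

If "Maximum renewable life" is 0, change it.

You can change it either with the two commands below (run inside `kadmin.local`) or in the configuration file /var/kerberos/krb5kdc/kdc.conf.

Change the maxrenewlife of the krbtgt principal:

modprinc -maxrenewlife 90day krbtgt/CAT.COM@CAT.COM

Change the maxrenewlife of a single principal:

modprinc -maxrenewlife 90day +allow_renewable hue/h1.cat.com@CAT.COM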
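The "is it 0?" check above can be scripted. This is a minimal sketch that reads `getprinc` output on stdin. It assumes MIT kadmin's output format, which prints a line such as `Maximum renewable life: 7 days 00:00:00` and shows a zero value as `0 days 00:00:00`. The function name `check_renewable` is hypothetical.

```shell
# Hypothetical check: fail if "getprinc" output shows a zero maximum
# renewable life, i.e. tickets for this principal cannot be renewed.
check_renewable() {
    line=$(grep '^Maximum renewable life:') || {
        echo "no 'Maximum renewable life' line found" >&2
        return 2
    }
    case $line in
        *": 0 days 00:00:00")
            echo "maxrenewlife is 0: fix it with modprinc -maxrenewlife" >&2
            return 1 ;;
        *)
            echo "$line" ;;
    esac
}

# Usage: kadmin.local -q 'getprinc krbtgt/CAT.COM@CAT.COM' | check_renewable
```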

3. Hive Metastore problem:

The Hive keytab had been generated incorrectly, so the Metastore could not start.

Fix: regenerate the keytab following the steps above, then send it to all machines.

`failure to login: for principal: hive/h1.cat.com@CAT.COM from keytab hive.keytab javax.security.auth.login.LoginException: Checksum failed`

```
2019-12-30 18:22:08,407 ERROR org.apache.hadoop.hive.metastore.HiveMetaStore: [main]: org.apache.thrift.transport.TTransportException: org.apache.hadoop.security.KerberosAuthException: failure to login: for principal: hive/h1.cat.com@CAT.COM from keytab hive.keytab javax.security.auth.login.LoginException: Checksum failed
at org.apache.hadoop.hive.thrift.HadoopThriftAuthBridge$Server.<init>(HadoopThriftAuthBridge.java:327)
at org.apache.hadoop.hive.thrift.HadoopThriftAuthBridge.createServer(HadoopThriftAuthBridge.java:101)
at org.apache.hadoop.hive.metastore.HiveMetaStore.startMetaStore(HiveMetaStore.java:7291)
at org.apache.hadoop.hive.metastore.HiveMetaStore.main(HiveMetaStore.java:7210)
at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
at java.lang.reflect.Method.invoke(Method.java:498)
at org.apache.hadoop.util.RunJar.run(RunJar.java:313)
at org.apache.hadoop.util.RunJar.main(RunJar.java:227)
Caused by: org.apache.hadoop.security.KerberosAuthException: failure to login: for principal: hive/h1.cat.com@CAT.COM from keytab hive.keytab javax.security.auth.login.LoginException: Checksum failed
```
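One common reason for "Checksum failed" is a stale keytab: the principal was re-keyed after the keytab was exported, so the key version numbers (kvno) no longer match. The helpers below are a minimal sketch for comparing the two. They assume MIT output formats: each `klist -kt` row starts with the KVNO and ends with the principal name, and `getprinc` prints lines like `Key: vno 2, aes256-cts-hmac-sha1-96`. `keytab_kvno` and `kdc_kvno` are hypothetical names.

```shell
# Hypothetical helper: highest KVNO stored in a keytab for principal $1.
# Reads "klist -kt <keytab>" output on stdin; prints 0 if none found.
keytab_kvno() {
    awk -v p="$1" '$NF == p && $1 ~ /^[0-9]+$/ { if ($1 + 0 > max + 0) max = $1 + 0 }
                   END { print max + 0 }'
}

# Hypothetical helper: current KVNO according to the KDC.
# Reads "kadmin.local -q 'getprinc <principal>'" output on stdin.
kdc_kvno() {
    awk '$1 == "Key:" && $2 == "vno" { sub(/,$/, "", $3); print $3; exit }'
}

# Usage:
#   p=hive/h1.cat.com@CAT.COM
#   a=$(klist -kt /etc/hive/conf/hive.keytab | keytab_kvno "$p")
#   b=$(kadmin.local -q "getprinc $p" | kdc_kvno)
#   [ "$a" = "$b" ] || echo "keytab is stale for $p: re-export it"
```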

================================================================

Other notes

Steps for giving a new user access:

```
[root@bogon ~]# kadmin.local -q "addprinc user1"
Authenticating as principal root/admin@CAT.COM with password.
WARNING: no policy specified for user1@CAT.COM; defaulting to no policy
Enter password for principal "user1@CAT.COM":
Re-enter password for principal "user1@CAT.COM":
Principal "user1@CAT.COM" created.
```

```
su - user1
kinit user1
# enter the password set above
klist    # check the ticket validity
```

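Other:

`kdestroy` clears the current credentials, i.e. logs out of the current Kerberos identity.

`kinit -R` renews the ticket.

Ticket lifetimes can differ between users and between machines, because they depend on each machine's /etc/krb5.conf.

A ticket can only be renewed while it is still valid.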

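On one machine, an OS account keeps only one Kerberos identity at a time in its default ticket cache. For root this cache is /tmp/krb5cc_0, as the klist output above shows. If user1 is logged in and user2 then logs in from the same account, the identity switches to user2.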
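If two identities really are needed from the same OS account, each can be given its own credential cache through the `KRB5CCNAME` environment variable, which the MIT tools read. This is a minimal sketch. The helper `with_cache` and the `/tmp/krb5cc_<name>` paths are hypothetical choices.

```shell
# Hypothetical helper: run a command against a dedicated credential cache,
# so different Kerberos identities do not overwrite each other's tickets.
with_cache() {
    name=$1
    shift
    KRB5CCNAME="FILE:/tmp/krb5cc_$name" "$@"
}

# Usage:
#   with_cache user1 kinit user1
#   with_cache user2 kinit user2
#   with_cache user1 klist    # still shows user1@CAT.COM
```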

`kadmin.local -q 'getprinc krbtgt/CAT.COM@CAT.COM'` shows a principal's settings. The same works for other principals, such as hue/h1.cat.com@CAT.COM.

Configuration files: /etc/krb5.conf, /var/kerberos/krb5kdc/kdc.conf

Start commands:

```
systemctl start krb5kdc
systemctl start kadmin
```

List Kerberos principals:

```
kadmin.local -q "listprincs"
```

That's all for my Kerberos notes. Next up: Sentry.
